AI-Agent-API: Conversational Orchestrator

A Go service that sits in front of LiteLLM, stores every agent/session/message in MySQL, and exposes an OpenAI-style API for any product that needs structured AI conversations with tool calling.

Overview

mono/ai-agent-api is the control plane for all of my AI-powered experiences. It defines agent behavior (prompt, model, tool schema, fallback order), tracks long-running conversations, persists every message/tool call, and proxies completions through LiteLLM with centralized authentication and cost controls. Products such as Code Editor, AI Agent Admin, and Chat-GPT talk to this service instead of hitting providers directly.

Key Features

Agent Governance

- Structured Catalog: Agents live in the

agenttable with key, display name, system prompt, default/fallback models, temperature, metadata, and JSON tool definitions. - CRUD API:

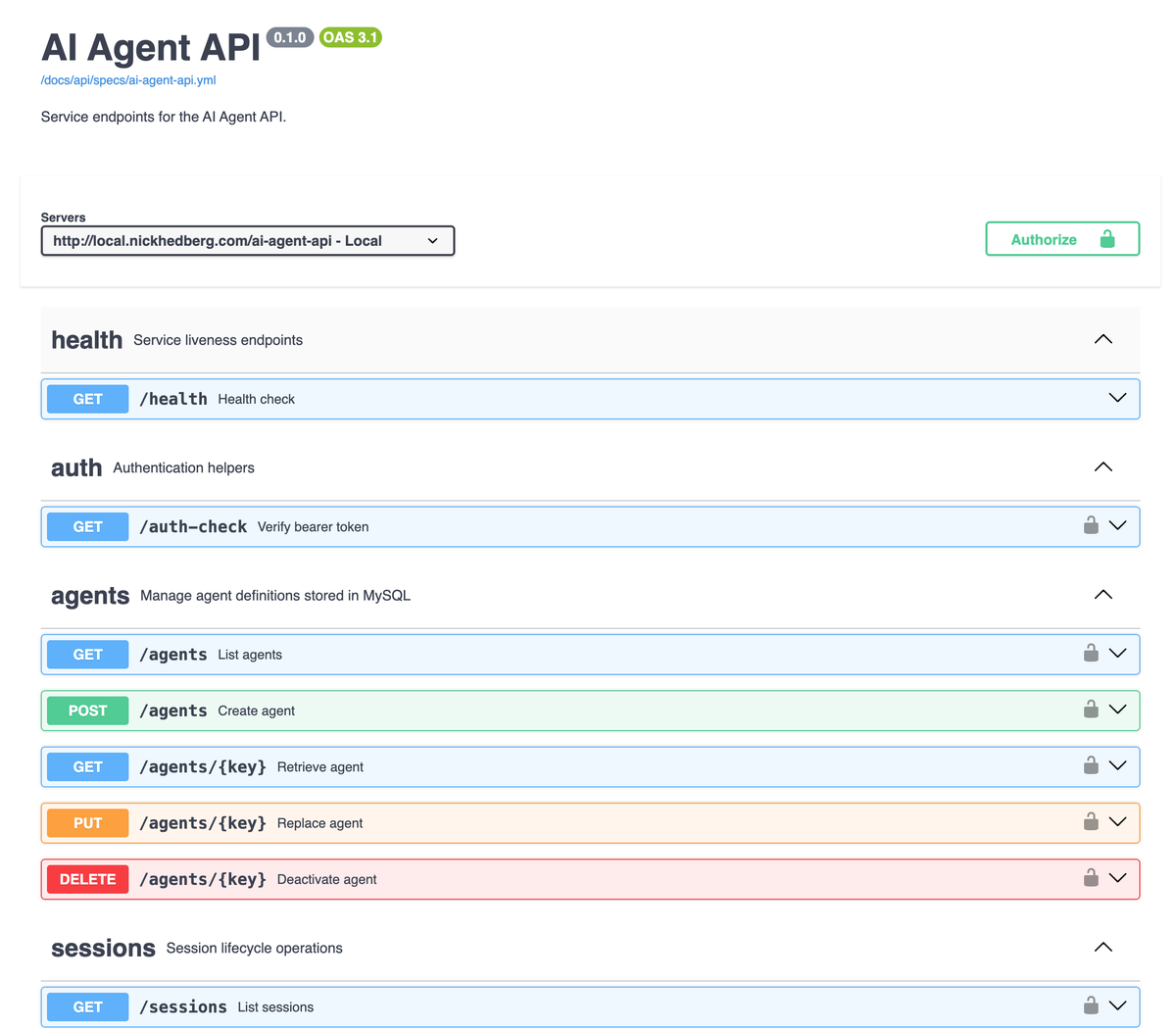

/ai-agent-api/agentssupports pagination, creation, updates, and soft deletes with cursor-based navigation and validation. - Unsupported Flags: Each response includes an

unsupported_modelsarray so downstream UIs can highlight fallback combos that no longer exist upstream.
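A minimal sketch of how an agent record and its unsupported_models flag might fit together, assuming the supported-model catalog is available as a set of model IDs. The Agent field names, the ModelChain and UnsupportedModels helpers, and the sample model IDs are hypothetical illustrations, not the repository's actual types.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Agent is a hypothetical mirror of one row in the agent table.
type Agent struct {
	Key            string          `json:"key"`
	DisplayName    string          `json:"display_name"`
	SystemPrompt   string          `json:"system_prompt"`
	DefaultModel   string          `json:"default_model"`
	FallbackModels []string        `json:"fallback_models"`
	Temperature    float64         `json:"temperature"`
	Tools          json.RawMessage `json:"tools"` // JSON tool definitions stored verbatim
}

// ModelChain returns the default model followed by the fallbacks, in order,
// with duplicates and empty entries removed.
func (a Agent) ModelChain() []string {
	seen := make(map[string]bool)
	var chain []string
	for _, m := range append([]string{a.DefaultModel}, a.FallbackModels...) {
		if m == "" || seen[m] {
			continue
		}
		seen[m] = true
		chain = append(chain, m)
	}
	return chain
}

// UnsupportedModels lists every model in the chain that the catalog no longer
// offers. The result is never nil, so it encodes as [] rather than null.
func (a Agent) UnsupportedModels(catalog map[string]bool) []string {
	unsupported := []string{}
	for _, m := range a.ModelChain() {
		if !catalog[m] {
			unsupported = append(unsupported, m)
		}
	}
	return unsupported
}

func main() {
	agent := Agent{
		Key:            "code-editor",
		DefaultModel:   "gpt-4o",
		FallbackModels: []string{"claude-3-5-sonnet", "gpt-4o", "legacy-model"},
		Tools:          json.RawMessage(`[]`),
	}
	catalog := map[string]bool{"gpt-4o": true, "claude-3-5-sonnet": true}
	fmt.Println(agent.ModelChain())                // [gpt-4o claude-3-5-sonnet legacy-model]
	fmt.Println(agent.UnsupportedModels(catalog)) // [legacy-model]
}
```

Returning an empty slice instead of nil keeps the JSON contract stable for UIs that iterate over unsupported_models without a null check.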

Session & Conversation Graph

- Dual Containers: Conversations group multiple sessions over time, while each session represents a single run tied to one agent and can override settings such as the allowed tools or temperature.

- Message Audit Trail: Every message stores role, model, tool call payload, metadata, and timestamps, making it easy to replay or export transcripts.

- Tool Awareness: The store persists tool_call ids and function arguments so tool responses can be reconciled even after retries.
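The reconciliation idea can be sketched as follows, assuming tool responses are stored as tool-role messages carrying the tool_call id they answer (the OpenAI chat convention). Message, ToolCall, and PendingToolCalls are hypothetical names; the real sessions.Store structs may differ.

```go
package main

import "fmt"

// ToolCall is a hypothetical stored tool call: the id issued by the model
// plus the function name and raw JSON arguments.
type ToolCall struct {
	ID        string
	Name      string
	Arguments string
}

// Message is a simplified row from the message audit trail.
type Message struct {
	Role       string     // "system", "user", "assistant", or "tool"
	Model      string     // model that produced the message, if any
	ToolCalls  []ToolCall // set on assistant messages that request tools
	ToolCallID string     // set on tool messages answering a call
}

// PendingToolCalls returns, in request order, the tool calls that have no
// matching tool response yet. Matching is by id, so duplicate responses or
// duplicate call records left behind by retries do not cause double work.
func PendingToolCalls(history []Message) []ToolCall {
	answered := make(map[string]bool)
	for _, m := range history {
		if m.Role == "tool" && m.ToolCallID != "" {
			answered[m.ToolCallID] = true
		}
	}
	pending := []ToolCall{}
	for _, m := range history {
		for _, tc := range m.ToolCalls {
			if !answered[tc.ID] {
				pending = append(pending, tc)
				answered[tc.ID] = true // report each id at most once
			}
		}
	}
	return pending
}

func main() {
	history := []Message{
		{Role: "user"},
		{Role: "assistant", Model: "gpt-4o", ToolCalls: []ToolCall{
			{ID: "call_1", Name: "search", Arguments: `{"q":"go 1.22 mux"}`},
			{ID: "call_2", Name: "read_file", Arguments: `{"path":"main.go"}`},
		}},
		{Role: "tool", ToolCallID: "call_1"},
		{Role: "tool", ToolCallID: "call_1"}, // duplicate left by a retried request
	}
	for _, tc := range PendingToolCalls(history) {
		fmt.Println(tc.ID, tc.Name) // call_2 read_file
	}
}
```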

LiteLLM Proxy & Streaming

- Unified Client: The handler translates stored history into OpenAI chat.completions payloads and forwards them to LiteLLM, which in turn routes them to OpenAI, Anthropic, Gemini, and other providers.

- Streaming Support: If the request advertises text/event-stream, the service streams LiteLLM deltas back to the caller as server-sent events and saves the resulting assistant/tool messages once the stream finishes.

- Idempotent Writes: An LRU cache deduplicates POST /sessions/{id}/messages requests that carry an Idempotency-Key header, preventing double replies when clients retry after network errors.

Technical Architecture

```
mono/ai-agent-api/
├── handlers/          # HTTP handlers for agents, sessions, conversations, models
├── internal/
│   ├── agents/        # Repository (MySQL) + domain models
│   ├── sessions/      # Conversation store, pagination, tool call structs
│   ├── litellm/       # HTTP client wrapper
│   ├── models/        # Catalog client for supported models
│   └── idempotency/   # TTL cache
└── main.go            # Router wiring + dependency injection
```

The service targets Go 1.22 and uses the enhanced http.ServeMux patterns, which put the HTTP method and path wildcards such as {id} directly in the route, so routes look like:

```go
// Method + path pattern; the handler can read {id} via r.PathValue("id").
mux.Handle("POST /ai-agent-api/sessions/{id}/messages",
	handlers.RequireBearer(masterKey, addMessageHandler))
```

Each request passes through three layers:

1. Auth: handlers.RequireBearer enforces the master key and short-circuits unauthorized calls.

2. Store Interaction: Reads/writes against MySQL via repositories (agents.SQLRepository, sessions.Store).

3. LiteLLM Calls: Builds openai.ChatCompletionNewParams, forwards them to LiteLLM, and persists resulting assistant/tool messages.